- Implementation strategies for adaptive digital audio effects

- A-DAFX adaptive digital effects

- Adaptive digital audio effects (A-DAFX): a new class of sound transformations

- Adaptive audio effects based on STFT, using a source filter model

- Driving pitch-shifting and time-scaling algorithms with adaptive and gestural techniques

- An interdisciplinary approach to audio effect classification

The first article discusses mapping functions and a control curve. The part on control scaling is also useful.
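To make the mapping idea concrete, here is a minimal Python/NumPy sketch (not MATLAB, and not the exact formulation from the article) of a control-scaling step. A raw feature curve, e.g. an RMS or pitch curve, is normalised to [0, 1], optionally bent by a mapping function, and then scaled to the range of an effect parameter. The name `scale_control` and the min/max normalisation are my own assumptions.

```python
import numpy as np

def scale_control(feature, out_min, out_max, warp=None):
    """Map a raw feature curve onto an effect-parameter range (hypothetical helper).

    1. Normalise the feature to [0, 1] using its own minimum and maximum.
    2. Optionally apply a mapping function warp: [0, 1] -> [0, 1].
    3. Scale linearly to [out_min, out_max].
    """
    feature = np.asarray(feature, dtype=float)
    lo, hi = feature.min(), feature.max()
    if hi > lo:
        norm = (feature - lo) / (hi - lo)
    else:
        # Constant feature: there is no variation to map, so stay at out_min
        norm = np.zeros_like(feature)
    if warp is not None:
        norm = warp(norm)
    return out_min + (out_max - out_min) * norm
```

For example, `scale_control([1.0, 2.0, 3.0], 0.5, 2.0)` maps the curve linearly to `[0.5, 1.25, 2.0]`; passing `warp=lambda x: x**2` as the mapping function gives `[0.5, 0.875, 2.0]`, so small feature values change the parameter less.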

I'm going to read the other articles now as well, because some other things I tried in MATLAB didn't work well. A bit more explanation:

- I implemented the basics of the HPS (harmonic product spectrum) algorithm, as promised yesterday. It looks basically all right, but I can't tell yet whether it works, for three reasons. First, I haven't implemented the decision code yet (the part that decides which peak is the current pitch). Second, I haven't thought about frames or blocks; this may not be necessary, but I haven't considered it yet. Third, MATLAB did something that seemed strange: it didn't recognize the file I wanted to play. (Solved: the file name argument has to be passed as a string, i.e. enclosed in single quotes ' '.)

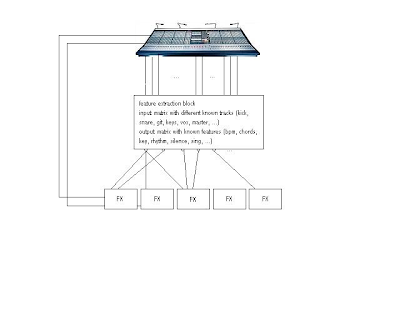
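A minimal Python/NumPy sketch of HPS that also covers the two missing pieces above, the decision step and the framing. Each frame is windowed and transformed; its magnitude spectrum is multiplied by copies of itself downsampled by 2, 3, ..., R, and the strongest product peak inside an allowed range is taken as the pitch. The window, zero-padding factor, number of harmonics, pitch range and frame/hop sizes are my own assumptions, and `hps_pitch` and `pitch_track` are hypothetical names.

```python
import numpy as np

def hps_pitch(frame, fs, n_harmonics=5, fmin=50.0, fmax=1000.0):
    """Estimate the pitch (Hz) of one frame with the harmonic product spectrum."""
    n = len(frame)
    nfft = 4 * n  # zero-padding for a finer frequency grid (an assumption)
    spectrum = np.abs(np.fft.rfft(frame * np.hanning(n), nfft))
    # HPS: multiply |X(k)| by its downsampled copies |X(2k)|, ..., |X(Rk)|
    length = len(spectrum) // n_harmonics
    hps = spectrum[:length].copy()
    for r in range(2, n_harmonics + 1):
        hps *= spectrum[::r][:length]
    # Decision step: the strongest HPS peak inside [fmin, fmax] is the pitch
    freqs = np.arange(length) * fs / nfft
    candidates = np.flatnonzero((freqs >= fmin) & (freqs <= fmax))
    if candidates.size == 0:
        return 0.0
    return float(freqs[candidates[np.argmax(hps[candidates])]])

def pitch_track(x, fs, frame_len=1024, hop=512, **kwargs):
    """Cut the signal into overlapping frames and estimate one pitch per frame."""
    starts = range(0, len(x) - frame_len + 1, hop)
    return np.array([hps_pitch(x[s:s + frame_len], fs, **kwargs) for s in starts])
```

For a one-second 220 Hz tone with five harmonics sampled at 8000 Hz, `pitch_track(x, 8000)` should return values close to 220 Hz for every frame. The per-frame pitch values are exactly the kind of curve that could then serve as a control curve.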

- I also tried to write a file similar to applyEffect.m that selects the extraction algorithm and the effect, then reads the audio file, applies the effect with the parameters obtained from the extraction algorithm, and plays the result. This is more complex than I expected, so I need to read or think some more about the control curve.
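A rough Python/NumPy sketch of the applyEffect.m idea, to think through where the control curve fits. An extractor produces one feature value per frame; the values are scaled to a parameter range, interpolated from frame rate to sample rate, and handed to the effect. All names here (`apply_effect`, `EXTRACTORS`, `EFFECTS`, `rms_curve`, `adaptive_gain`) are hypothetical, reading and playing the file are left out, and the RMS-driven gain is only a placeholder effect.

```python
import numpy as np

def rms_curve(x, frame_len=1024, hop=512):
    """Hypothetical extractor: RMS per frame, plus the centre sample of each frame."""
    starts = np.arange(0, len(x) - frame_len + 1, hop)
    if starts.size == 0:
        raise ValueError("signal is shorter than one frame")
    values = np.array([np.sqrt(np.mean(x[s:s + frame_len] ** 2)) for s in starts])
    return values, starts + frame_len // 2

def adaptive_gain(x, control):
    """Placeholder effect: multiply each sample by its control value."""
    return x * control

# Hypothetical registries, in the spirit of an applyEffect.m dispatcher
EXTRACTORS = {"rms": rms_curve}
EFFECTS = {"gain": adaptive_gain}

def apply_effect(x, extractor="rms", effect="gain", out_min=0.2, out_max=1.0):
    """Extract a feature, turn it into a control curve, and apply the effect."""
    values, centres = EXTRACTORS[extractor](x)
    # Control scaling: normalise the feature to [0, 1], then map to [out_min, out_max]
    span = values.max() - values.min()
    norm = (values - values.min()) / span if span > 0 else np.zeros_like(values)
    frame_control = out_min + (out_max - out_min) * norm
    # Frame rate -> sample rate: linear interpolation between frame centres
    # (np.interp holds the first/last value outside the range of centres)
    control = np.interp(np.arange(len(x)), centres, frame_control)
    return EFFECTS[effect](x, control)
```

Keeping extractors and effects in dictionaries means adding a new one is just another entry, and the control-curve handling (scaling plus interpolation) lives in one place instead of inside every effect.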